I have long been influenced by two things: Pokémon and programming. In fact, it was Pokémon that got me interested in becoming a software developer, and one of my first websites was about Pokémon.

Project EyeZard is a computer vision and TTS (text-to-speech) project aimed at reading aloud what's happening in Pokémon VGC battles on the Nintendo Switch (currently in Pokémon Sword and Pokémon Shield), though it may grow into something more than that.

I can't say I'm too surprised that I'm working on a Pokémon project. But how did I end up on the path of making a Pokémon screen reader? And what have I done so far?

Let me tell you my story. It'll be a quick run-through for right now.

The Backstory

I've played my way through the main series since Pokémon Red, but it wasn't until 2019's Pokémon Sword and Shield that I became aware of Pokémon VGC. Pokémon VGC (Video Game Championships) is the official competitive battling format of the Pokémon main series games.

I learned about VGC on and off through the early pandemic, but it was in November 2020 that I came across a post asking for help on r/VGC:

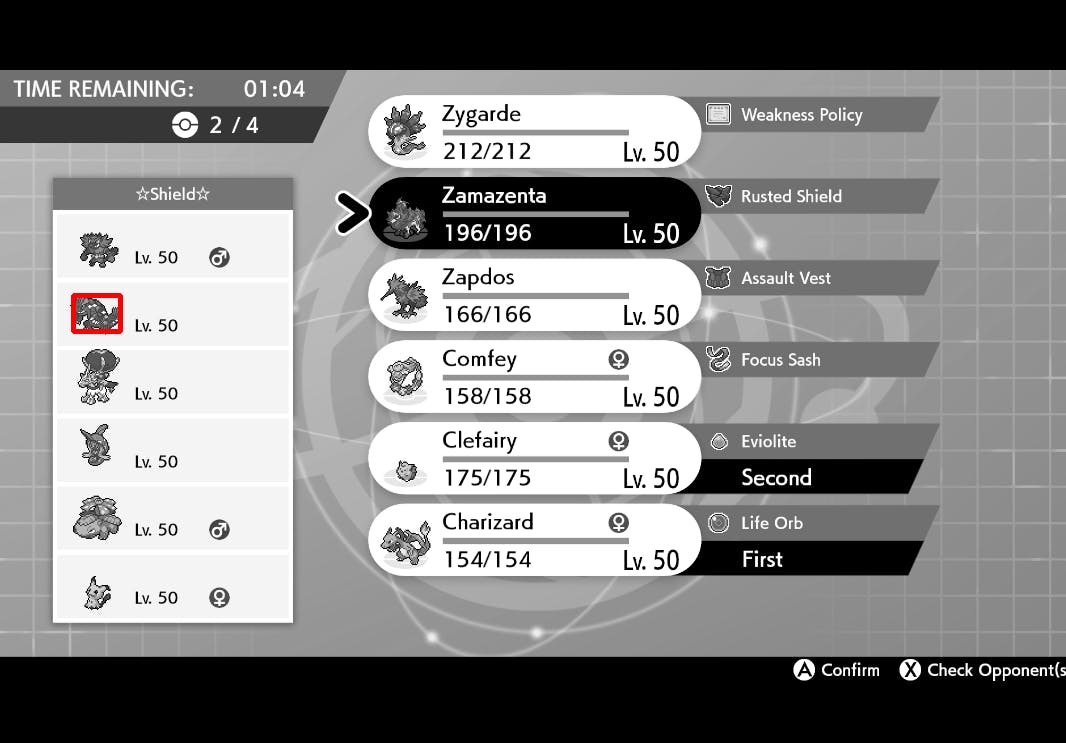

Hello everyone. I am a blind person who plays Pokémon using in game sounds and a screen reading app on my phone to read on screen text. I have come to a problem When it comes to ranked battles. I cannot see the opponents team because as far as I can tell the names of the Pokémon are not listed, just the graphic of the opponents Pokémon. So there’s no way for me to select the four ideal Pokémon from my team for a specific team.

Ever since I read that thread, it has kept resurfacing in the back of my mind. I thought it was incredibly cool that a phone could help a blind person play. The limitation they ran into, though, was really unfortunate.

I wondered, "How would you get around that?" But I didn't even know where to start. The only immediate lesson I could take from it was to make all of my website projects as accessible as possible.

Software helped me a lot during the years I struggled with RSI (repetitive strain injury) in my hands and wrists. During that time, I found software that let me create voice commands to trigger actions I could program myself. The issues I faced then probably made it easier for me to understand the importance of good accessibility, though I only learned so much about it at the time.

It was in March and April of 2022 that I began looking into accessibility more deeply. It all started with an accessibility presentation, which gave a ton of helpful resources. I also had a coffee chat with a software developer who turned out to be an accessibility engineer. I didn't even know that was a thing! Add edX's Introduction to Accessibility course to the mix, and I was totally inspired.

Maybe I could build something. Accessibility tools help loads of people. Just look at how many people make use of captions! And if a program could understand what was going on in the game, what else could it do?

And with that, I decided to try my hand at what I named Project EyeZard.

The Python Prototypes

It was after midnight, technically April 7, 2022, when I took my first steps with OpenCV in Python. OpenCV is an open source computer vision library (hence the name: "Open" plus "CV" for "computer vision"). It has all sorts of tools that let a computer manipulate and extract data from images and video. You can do things like face detection, object tracking, and more.

I started by trying to match a Groudon sprite against an opponent's team in a screenshot I'd taken of a VGC battle.

Life swept me away after that, and I didn't come back to the project until August 8. When I did, it was with renewed fire. I looked over my code from April to refresh my memory of how it all worked. I hadn't known everything in April, and I still didn't in August, so I kept studying examples. This led me to SIFT (Scale-Invariant Feature Transform), an algorithm for detecting and describing distinctive features in an image. Feature matching is when you find notable points (keypoints) in two images, describe each one with a numeric vector called a descriptor, and compare the descriptors between the images. SIFT found the features, and then FLANN (Fast Library for Approximate Nearest Neighbors) found the matches. I'll talk more about those two (and why they actually weren't the best choice) in a future post.
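To make the SIFT-plus-FLANN idea more concrete, here is a minimal sketch of the matching half in plain Python. It assumes the descriptors have already been extracted (in OpenCV that would be `cv2.SIFT_create()` followed by `detectAndCompute(image, None)`) and uses a brute-force nearest-neighbour search as a stand-in for FLANN, which performs the same search approximately and much faster. The 0.7 ratio for Lowe's ratio test and all helper names are assumptions for illustration, not the project's actual code. The last function ranks candidate sprites by how many good matches each one gets, which is one way to decide which sprite in a sheet "had the best results."

```python
import math

# Assumption: descriptors are plain lists of floats (real SIFT descriptors have
# 128 numbers each); tiny 2-D vectors behave the same way for testing.


def euclidean(a, b):
    """L2 distance between two descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def good_matches(query_desc, train_desc, ratio=0.7):
    """Count query descriptors that pass Lowe's ratio test against train_desc.

    Brute-force 2-nearest-neighbour search: a hypothetical stand-in for FLANN,
    which finds approximately the same neighbours much faster.
    """
    if len(train_desc) < 2:
        return 0  # the ratio test needs a best and a second-best neighbour
    count = 0
    for q in query_desc:
        best, second = sorted(euclidean(q, t) for t in train_desc)[:2]
        # Keep the match only if it is clearly closer than the runner-up.
        if best < ratio * second:
            count += 1
    return count


def best_sprite(screenshot_desc, sprite_descs):
    """Hypothetical helper: name of the sprite with the most good matches."""
    return max(sprite_descs,
               key=lambda name: good_matches(sprite_descs[name], screenshot_desc))
```

In OpenCV itself, the search step would be `cv2.FlannBasedMatcher` and its `knnMatch(..., k=2)` method, followed by the same ratio check on each returned pair.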

Then I put together a plan and spent the next month building folders of mini prototypes, one for each part of the problem. I also created sprite sheets of the Pokémon mini sprites shown at team preview, plus sprite sheets of their names in the languages that don't use Latin characters.

- August 9: I generated a sprite sheet to work with using the Pokémon Sprite Generator on GitHub. I could load each sprite and find the matching one (whichever gave the best results). This was very slow for a team of six Pokémon, but it worked.

- August 10: I re-ran a comparison of results from SIFT, AKAZE, KAZE, and ORB. I couldn't get results from anything other than SIFT, which I was already using, so I stuck with it. This may have to do with how small the sprites are.

- August 11: I started looking into how to use OCR (optical character recognition) to pull text from an image. Beyond reading English, I wanted to be able to translate foreign-language names into English, too. I thought this would make the tool helpful for a lot more people.

    - I found Tesseract for OCR and read up on how to get it to read multiple languages.

    - I found people reporting that Pokémon Sword and Shield use Fontworks' FOT-UDKakugoC80Pro-DB and FOT-RodinNTLGPro-DB fonts for English and Japanese, respectively.

    - The fonts used for Korean, Simplified Chinese, and Traditional Chinese might be the Nintendo Switch's built-in system fonts, which may be a custom UD (Universal Design) font by the company Morisawa. If so, I may not be able to find copies of them to work with.

- August 12:

    - I ran my first tests with Tesseract, which struggled to read small Japanese characters, such as the small "tsu" (ッ) and small "yu" (ュ) in "Mimikyu" (ミミッキュ). The best I could manage was having it read the small characters as their regular-sized counterparts.

    - I found out that Apple doesn't permit its TTS voices to be used for performances or other non-personal purposes (though Microsoft seems to be fine with it). That sounded like a potential copyright-claim issue whenever I showed my work, so I looked into Google TTS and Amazon Polly instead.

- August 13: I figured out how to calculate the HP bar percentage using thresholding.

- August 14-20:

    - I generated "sprite sheets" of Pokémon names in Japanese, Korean, Simplified Chinese, and Traditional Chinese to match against the in-game text. That way, if Tesseract failed, I had another trick to fall back on.

    - I tried to improve Tesseract's accuracy by applying the thresholding I'd learned about to the game's text image and then inverting the colors so the text was black on a white background. This seemed to help somewhat with non-Latin characters. It still had problems, but it did fix some of the errors I'd run into when reading Latin characters.

- August 21: I used a combination of Python and terminal commands with Mac's built-in `screencapture` to grab screenshots from my Elgato HD 60's Game Capture HD program.

- August 22:

    - I set up TTS with Amazon Polly, which meant running it in a separate thread and subprocess so it wouldn't stall the rest of the program.

    - Because text in the main text box of Pokémon games is revealed gradually, I had to figure out how to tell whether a line had finished appearing. I checked for exclamation points, ellipses, and a few specific exceptions.

- August 23: I created a system for reading Pokémon names written in non-Latin scripts. Using my non-Latin name sprite sheets, I got it working (and working quickly) by feeding in the names of the 12 Pokémon in play. When the OCR ran into a non-Latin word (where its confidence would be low), the program checked whether that word matched any of the 12 names: first with multilingual OCR, and if that failed (as it usually did for non-Latin characters), by comparing the word against those Pokémon's name sprites in the various languages using SIFT.

The video above shows my August 23 prototype using TTS to read the battle text box aloud, including translating non-English names into English. It's definitely not perfect, but it makes me smile.
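The August 13 HP-bar step can be sketched like this, under several assumptions: the bar has already been cropped out of the screenshot and converted to grayscale, one row of pixels running across the bar is sampled, and the filled part of the bar is brighter than the empty part. The threshold of 100 is a made-up starting value that would need tuning against real screenshots. OpenCV's `cv2.threshold` with `cv2.THRESH_BINARY` performs the same per-pixel comparison over a whole image.

```python
def hp_percentage(bar_row, threshold=100):
    """Estimate HP% from one row of grayscale pixels sampled across the HP bar.

    Assumptions (hypothetical): the row spans exactly the bar's full width,
    and filled pixels are brighter than `threshold` while empty ones are darker.
    """
    if not bar_row:
        raise ValueError("bar_row must not be empty")
    # Threshold each pixel into filled (1) or empty (0).
    mask = [1 if value > threshold else 0 for value in bar_row]
    # The filled fraction of the bar's width is the HP percentage.
    return round(100 * sum(mask) / len(mask))
```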
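The August 14-20 OCR preprocessing (threshold the text image, then invert it so the text ends up black on white) could look like the following sketch. It assumes a grayscale crop of light text on a dark box, stored as a list of pixel rows; the threshold of 128 is an assumed default. In OpenCV, `cv2.threshold` with the `cv2.THRESH_BINARY_INV` flag does the thresholding and the inversion in a single call.

```python
def binarize_for_ocr(gray, threshold=128):
    """Turn a grayscale crop (list of pixel rows) into black text on white.

    Assumption: the in-game text is lighter than its background. Pixels
    brighter than `threshold` (text) become 0 (black); everything else
    (background) becomes 255 (white), which is the inverted binary image.
    """
    return [[0 if value > threshold else 255 for value in row] for row in gray]
```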
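For the August 21 screenshot step, a Python wrapper around macOS's `screencapture` might look like this sketch. `-x` (don't play the capture sound) and `-R` (capture a screen rectangle given as x,y,w,h) are real `screencapture` options, but the function names and the idea of capturing only the capture program's window region are assumptions; the prototype's exact commands aren't shown in this post. It only runs on macOS.

```python
import subprocess


def screencapture_command(path, region=None):
    """Build the argument list for macOS's `screencapture` tool.

    -x silences the capture sound; -R limits the capture to a rectangle.
    `region` is a hypothetical (x, y, width, height) tuple, e.g. the area
    where the capture program's video is shown.
    """
    cmd = ["screencapture", "-x"]
    if region is not None:
        x, y, w, h = region
        cmd += ["-R", f"{x},{y},{w},{h}"]
    cmd.append(path)
    return cmd


def grab_screenshot(path, region=None):
    """Run screencapture (macOS only) and raise CalledProcessError on failure."""
    subprocess.run(screencapture_command(path, region), check=True)
```

Keeping the command-building separate from running it makes the argument list easy to check on any machine.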
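The August 22 requirement that TTS not stall the rest of the program boils down to a producer-consumer queue: the capture loop drops lines into a queue, and a background thread speaks them one at a time. This is a minimal sketch with a hypothetical `speak` callback. With Amazon Polly, that callback might request audio through boto3's `synthesize_speech` and play it in a subprocess, but that part is left out so the sketch runs anywhere.

```python
import queue
import threading


class Speaker:
    """Run text-to-speech on a background thread so the capture loop never waits.

    `speak` is a hypothetical blocking function that voices one string
    (for example, by fetching audio from a TTS service and playing it).
    """

    def __init__(self, speak):
        self._speak = speak
        self._queue = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def say(self, text):
        # Returns immediately; the worker thread speaks lines in order.
        self._queue.put(text)

    def close(self):
        # A None sentinel tells the worker to finish queued lines and stop.
        self._queue.put(None)
        self._thread.join()

    def _run(self):
        while True:
            text = self._queue.get()
            if text is None:
                break
            self._speak(text)
```

Because `say` only enqueues, a slow TTS request never delays the next frame grab.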
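The August 22 completeness check for the gradually revealed text box can be sketched as a simple suffix test. The endings mirror the post (exclamation points and ellipses, written either as three periods or as the single "…" character). The `exceptions` parameter is a hypothetical stand-in for the project's particular exceptions, which aren't listed here.

```python
# Endings that suggest a text-box line has finished printing.
ENDINGS = ("!", "...", "…")


def text_is_complete(text, exceptions=()):
    """Guess whether a gradually revealed text-box line has finished appearing.

    A line counts as complete if it ends with an exclamation point or an
    ellipsis, or if it exactly matches one of the (hypothetical) exceptions.
    """
    text = text.strip()
    return text.endswith(ENDINGS) or text in exceptions
```

A line caught mid-reveal usually stops mid-word, so it fails the check and the program waits for a later frame.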
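The August 23 fallback order can be sketched as below. Everything here is hypothetical scaffolding: `ocr_text` and `ocr_confidence` are assumed to come from a multilingual OCR pass, `names_in_play` maps each of the 12 Pokémon in play to its names in other languages, the cutoff of 60 (on Tesseract's 0-100 confidence scale) is a guess, and `sprite_matcher` stands in for the SIFT comparison against the name sprite sheets. The sketch also folds small katakana to full size before comparing, since the August 12 tests showed Tesseract reading characters like ッ and ュ as their regular-sized counterparts.

```python
# Small katakana and their full-size counterparts.
SMALL_TO_FULL = str.maketrans("ァィゥェォッャュョヮ", "アイウエオツヤユヨワ")


def fold_small_kana(text):
    """Map small katakana (e.g. ッ, ュ) to full size before comparing."""
    return text.translate(SMALL_TO_FULL)


def identify_pokemon(ocr_text, ocr_confidence, names_in_play, sprite_matcher,
                     min_confidence=60):
    """Return the English name an OCR'd word most likely refers to.

    Hypothetical inputs: names_in_play maps each English name to a list of
    its other-language names; only those 12 Pokémon are considered.
    sprite_matcher(candidates) returns the best-matching English name
    (or None) by comparing the word's image against name sprites.
    """
    if ocr_confidence >= min_confidence:
        folded = fold_small_kana(ocr_text)
        for english, foreign_names in names_in_play.items():
            candidates = [english, *foreign_names]
            if folded in (fold_small_kana(name) for name in candidates):
                return english
    # Low confidence or no text match: fall back to image comparison.
    return sprite_matcher(list(names_in_play))
```

Restricting both steps to the 12 Pokémon in play keeps the search small, which is what made this approach fast.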

Building with JavaScript

Once I got that final prototype working, I knew I was ready to move from Python to JavaScript.

Why make the switch? I wanted this to work in a browser. Although my capture card didn't show up as a camera/video option on my computer, it would for many other people. And a phone camera could be used the same way.

I want this to work for other people. I want this to work on phones. And I want this to work as universally across devices as possible.

- August 25-28: I got OpenCV working the way I needed it to in Node.js.

    - I had to build my own version of OpenCV.js to get SIFT and FLANN working (though I could technically get FLANN working with the default build, albeit in a roundabout way).

    - Once I figured out how to convert images to grayscale, my Node.js code produced the same results as the equivalent Python code.
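One general pitfall when porting OpenCV code between Python and JavaScript is channel order: `cv2.imread` in Python returns pixels in BGR order, while image data from a browser canvas is RGBA, so the matching conversions are `COLOR_BGR2GRAY` and `COLOR_RGBA2GRAY`. The sketch below, written in Python for consistency with the prototypes, uses the weights OpenCV documents for these conversions (0.299 R + 0.587 G + 0.114 B) to show that a pure red pixel only gets the same gray value when its channels are read in the right order. This is an illustration, not necessarily the issue the project ran into, and OpenCV's own integer arithmetic can differ from this floating-point version by rounding.

```python
def luma(r, g, b):
    """Gray value using the weights OpenCV documents for color-to-gray."""
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def gray_from_bgr_pixel(pixel):
    """Python's cv2.imread yields BGR pixels: blue comes first."""
    b, g, r = pixel[:3]
    return luma(r, g, b)


def gray_from_rgba_pixel(pixel):
    """Browser canvas image data yields RGBA pixels: red comes first.

    Alpha is ignored, as in COLOR_RGBA2GRAY.
    """
    r, g, b = pixel[:3]
    return luma(r, g, b)
```

Reading the same red pixel with the wrong order, for example passing RGB data to `gray_from_bgr_pixel`, treats red as blue and produces a much darker gray.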

However, life started getting busy in August, and it took up more and more of my time as the weeks went on. My progress slowed to a crawl, but I still chipped away at the project when I could.

- September 3: After about a week, I finished creating a JSON file that maps every Pokémon mini sprite to its species name and form.

- September 11: I figured out how to get Node.js to run command-line commands for grabbing screenshots.

- October 18:

    - I was having trouble getting accurate team preview matches in some cases (for instance, Amoonguss was being labeled as Minior). The fix was to upscale the sprite sheet to twice its size, which made its sprites the same size as the sprites in a 1080p game screenshot.

    - I debuted Project EyeZard on Twitch.

The video above is a clip of me streaming the JavaScript version of Project EyeZard, which could only read out team preview.
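The September 3 mapping file isn't shown in this post, so the structure below is a hypothetical example, sketched in Python for consistency with the prototypes: each entry is keyed by the sprite's position in the sprite sheet and stores the species name and form separately. The loader converts JSON's string keys back to integers so a match index can be used directly.

```python
import json

# Hypothetical shape: sprite-sheet cell index -> species and form.
SPRITE_MAP_JSON = """
{
  "0": {"species": "Groudon", "form": null},
  "1": {"species": "Amoonguss", "form": null},
  "2": {"species": "Minior", "form": "Meteor"}
}
"""


def load_sprite_map(text):
    """Parse the mapping and turn JSON's string keys back into ints."""
    return {int(index): entry for index, entry in json.loads(text).items()}


def label_for(sprite_map, index):
    """Readable label for a matched sprite, e.g. 'Minior (Meteor)'."""
    entry = sprite_map[index]
    form = entry["form"]  # JSON null becomes None
    return f"{entry['species']} ({form})" if form else entry["species"]
```

Keeping species and form as separate fields lets a screen reader announce just the species when the form doesn't matter.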
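The October 18 fix, doubling the sprite sheet so its sprites match the size of the sprites in a 1080p screenshot, is a 2x upscale. Below is a plain-Python nearest-neighbour version operating on a list of pixel rows. Nearest-neighbour keeps pixel-art edges crisp, but which interpolation the project actually used isn't stated, so that choice is an assumption. In OpenCV the equivalent would be `cv2.resize(sheet, None, fx=2, fy=2, interpolation=cv2.INTER_NEAREST)`.

```python
def upscale_nearest(image, factor=2):
    """Nearest-neighbour upscale of an image stored as a list of pixel rows.

    Each pixel is repeated `factor` times horizontally and each row `factor`
    times vertically, so a 2x upscale doubles both width and height.
    """
    out = []
    for row in image:
        wide = [pixel for pixel in row for _ in range(factor)]
        # Append independent copies so later edits to one row don't affect others.
        out.extend(list(wide) for _ in range(factor))
    return out
```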

Going Forward

With Pokémon Scarlet and Violet's release on the horizon, I'm even more pumped to work on this project. I also want to go into more detail about what I've done and the problems I've had to solve. I've had to figure out a lot of things along the way, and I'd like to share them with the world.

I don't know how far I'll get or what I'll ultimately be able to do. Whatever happens, I look forward to the adventure.